Taren Patel

American Sign Language Translating Glove

I received an honorable mention in the physical science and engineering category at this year’s Synopsys Science Fair for an American Sign Language (ASL) translating glove. The glove contains a variety of sensors that transmit hand data to a Raspberry Pi. I used Python to process and analyze the data and scikit-learn to build a machine learning model that predicts ASL words and phrases and translates them into English speech. The project includes a database that stores all the ASL gestures, and the machine learning algorithm builds its model from these gestures.

Description

How can we break the barrier between the deaf and non-deaf communities and make it easier for them to communicate with each other? I started with a set of requirements for this project. It needs a database of the gestures it can translate. It needs an accuracy greater than 85%. It needs to translate ASL to speech and play it over a speaker. Lastly, it needs to be mobile and travel with the person.

There are many ways I could have approached this problem, so I began by looking online for the most prominent solutions. This problem isn’t new, and there are many solutions on the internet, but as an engineer, my goal isn’t to create something new but to modify an existing solution to make it better. The main solution is a glove with sensors that track the position of each finger and the orientation of the hand. The problem with these gloves is that they can’t translate words or phrases, so I decided to tackle that aspect. I also saw a limit on the number of gestures these gloves could translate: for every new gesture the creator wanted to add, they had to program it directly into the code. I planned to solve both issues with machine learning, which I will describe a little later. Machine learning lets me translate words and phrases, and it makes it easy to add new gestures to the glove. With a database that stores all of the gesture translations, adding a new translation means adding data rather than changing code.
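To illustrate the idea of adding a gesture as data rather than as code, here is a minimal sketch of a gesture database. It assumes SQLite and a table named `gestures`, and it assumes each recording is a list of rows of sensor readings stored as JSON text. The schema and the function names `open_gesture_db`, `add_gesture`, and `load_gestures` are hypothetical and are not taken from the project’s actual code.

```python
import json
import sqlite3


def open_gesture_db(path=":memory:"):
    """Open (or create) a gesture database. The schema is a hypothetical example."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS gestures ("
        "id INTEGER PRIMARY KEY, "
        "translation TEXT NOT NULL, "
        "readings TEXT NOT NULL)"
    )
    return conn


def add_gesture(conn, translation, readings):
    """Store one recording: its English translation plus its sensor rows as JSON."""
    conn.execute(
        "INSERT INTO gestures (translation, readings) VALUES (?, ?)",
        (translation, json.dumps(readings)),
    )
    conn.commit()


def load_gestures(conn):
    """Return every stored recording as (translation, readings) pairs."""
    rows = conn.execute("SELECT translation, readings FROM gestures ORDER BY id")
    return [(translation, json.loads(readings)) for translation, readings in rows]


# Adding a new gesture is a database insert, not a code change.
conn = open_gesture_db()
add_gesture(conn, "hello", [[0.1, 0.2, 0.3, 0.4, 0.5], [0.2, 0.3, 0.4, 0.5, 0.6]])
add_gesture(conn, "thank you", [[0.9, 0.8, 0.7, 0.6, 0.5]])
print([translation for translation, _ in load_gestures(conn)])
```

Because the translations live in the table, the training script can reload everything with `load_gestures` whenever a new recording is added.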

To start, I decided to use 5 flex sensors to track the bend of each finger. I used an Arduino RP2040 to read all the sensor data and send it to a computer. The Arduino contains a gyroscope and an accelerometer, which allowed me to track the orientation and motion of the hand. I then wrote basic scripts that used randomly generated data points to test the machine learning and the database. I used randomly generated data because I hadn’t finished building the glove yet; once the glove was finished, I rewrote the code to use the sensor data instead. Here is a demonstration of the glove in action.

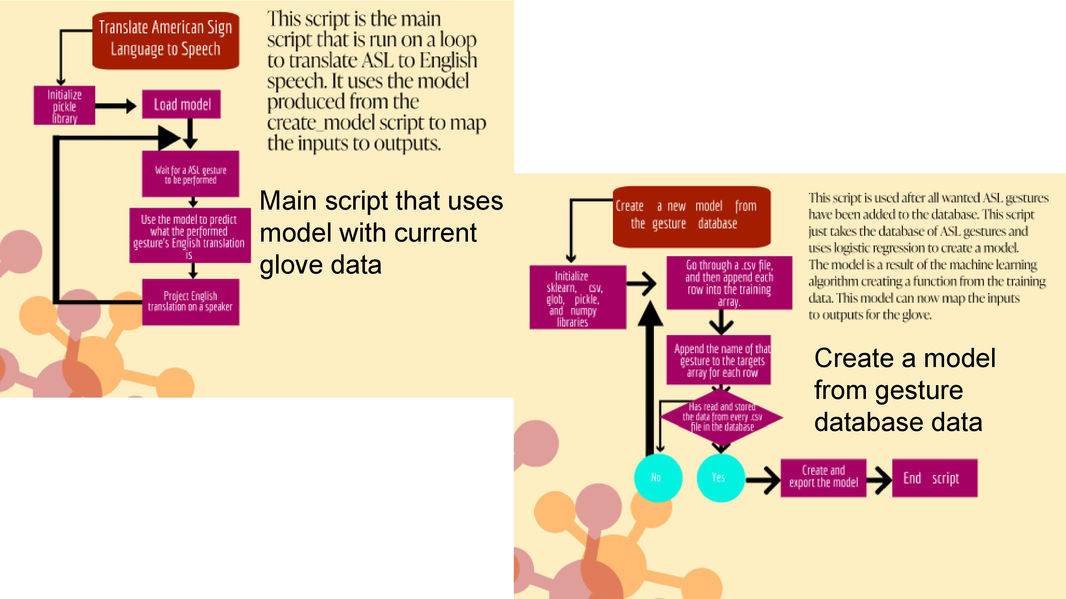
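The randomly generated test data described above could look something like the following sketch. It assumes 11 sensor columns (5 flex sensors plus 3 gyroscope axes and 3 accelerometer axes) and values between 0 and 1. Both the column count and the value range are assumptions for illustration, and `random_gesture` is a hypothetical helper name.

```python
import random

# Assumption: 5 flex sensors + 3 gyroscope axes + 3 accelerometer axes.
NUM_SENSORS = 11


def random_gesture(num_rows, rng):
    """Return a fake recording: one row per timestamp, one column per sensor."""
    return [[rng.random() for _ in range(NUM_SENSORS)] for _ in range(num_rows)]


rng = random.Random(0)  # fixed seed so test runs are repeatable
fake_recording = random_gesture(num_rows=20, rng=rng)
print(len(fake_recording), "rows x", len(fake_recording[0]), "sensors")
```

Data like this has the same shape as a real recording, so the database and training scripts can be exercised before the hardware exists.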

This is a brief summary of the machine learning implementation. Each gesture in the database is stored as a matrix: each column is a sensor, and each row is a timestamp. While I record a new gesture, the script keeps appending rows of sensor values until I signal that the gesture has been fully performed. To train, the script takes every recorded gesture from the database and appends its matrix to an array called data and its translation, as a string, to an array called targets, so each element of data lines up with its name at the same index in targets. I then split the data into training and testing sets. The algorithm fits a logistic regression model to the training set, then automatically runs the test set through the fitted model to measure how accurate it is. The resulting model is the one used for real-time translations.
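The steps above can be sketched with scikit-learn as follows. This is a sketch under stated assumptions, not the project’s actual script: it assumes each recording is resampled to a fixed number of rows and flattened so that every gesture becomes a feature vector of the same length (the write-up does not say how recordings of different lengths are handled), and it uses synthetic data in place of the database. The names `to_feature_vector`, `train`, and `N_ROWS` are hypothetical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

N_ROWS = 20  # assumption: every recording is resampled to this many timestamps


def to_feature_vector(matrix, n_rows=N_ROWS):
    """Resample a (timestamps x sensors) matrix to n_rows rows, then flatten it."""
    matrix = np.asarray(matrix, dtype=float)
    # Pick n_rows evenly spaced row indices so recordings of any length line up.
    idx = np.linspace(0, len(matrix) - 1, n_rows).round().astype(int)
    return matrix[idx].ravel()


def train(recordings, seed=0):
    """Fit a model on (translation, matrix) pairs and report held-out accuracy."""
    data = np.array([to_feature_vector(matrix) for _, matrix in recordings])
    targets = np.array([translation for translation, _ in recordings])
    x_train, x_test, y_train, y_test = train_test_split(
        data, targets, test_size=0.25, stratify=targets, random_state=seed
    )
    model = LogisticRegression(max_iter=1000)
    model.fit(x_train, y_train)
    return model, model.score(x_test, y_test)


# Synthetic stand-in for the database: two gestures, 8 recordings each,
# with 11 sensor columns and a different number of timestamps per recording.
rng = np.random.default_rng(0)
recordings = []
for label, level in [("hello", 0.2), ("thank you", 0.8)]:
    for _ in range(8):
        n = int(rng.integers(15, 30))
        recordings.append((label, level + 0.05 * rng.standard_normal((n, 11))))

model, accuracy = train(recordings)
print(f"held-out accuracy: {accuracy:.2f}")
print(model.predict([to_feature_vector(recordings[0][1])])[0])
```

For a real-time translation, a freshly recorded matrix would go through the same `to_feature_vector` step before being passed to `model.predict`.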

I performed some tests to see how I could improve prediction accuracy, and I learned that adding more data for each translation to the database helps. I originally used 2 sets of data per translation, but after increasing this to 5 sets, the model classified the gestures more accurately. When testing with the glove, I would run the script that fits the model, check whether the reported prediction accuracy was greater than 90%, and then test the accuracy myself using the glove. This process told me whether the model was good enough; if it wasn’t, I would change some variables to correct it.

Here are some graphs of sensor data that show how each gesture looks on each sensor. As you can see, the data points for each gesture are distinguishable, except for hello and goodbye. These data points blend together because the two gestures are similar, but the gyroscope and accelerometer data might make them easier to tell apart. The machine learning algorithm takes the data behind all of these graphs and finds the differences and characteristics that set the gestures apart from each other, so it can fit a model that outputs an accurate classification.

In the end, my model reached an accuracy of 99%. My initial goal was an accuracy greater than 85%, but after seeing the capabilities and accuracy of the glove, I raised that requirement to 90%. I also created a database to store all of the gestures, but it is stored locally on my computer. In the future, I want to move it to a cloud database so that multiple gloves can access the same gestures.

To conclude my presentation, I want to share how astonished I was at the final product. It exceeded my expectations in prediction accuracy, database storage, and ease of use. With the database, the glove’s dictionary of gestures can keep growing as more and more people add to it. This large number of translations will allow for real-world applications, and I believe that this proof of concept will be the future of ASL translating devices.